The wake-up call

Imagine this:

- A principal’s draft speech with sensitive board strategy notes pops up in Google.

- A recruiter’s candidate evaluation (“not a team player”) becomes searchable.

- A student’s late-night vent about bullying leaks into public search results.

- A library user’s login details, typed into ChatGPT, appear in cached pages.

All because of one click: “Share link.”

This isn’t hypothetical. Some shared ChatGPT conversations have already been indexed by Google.

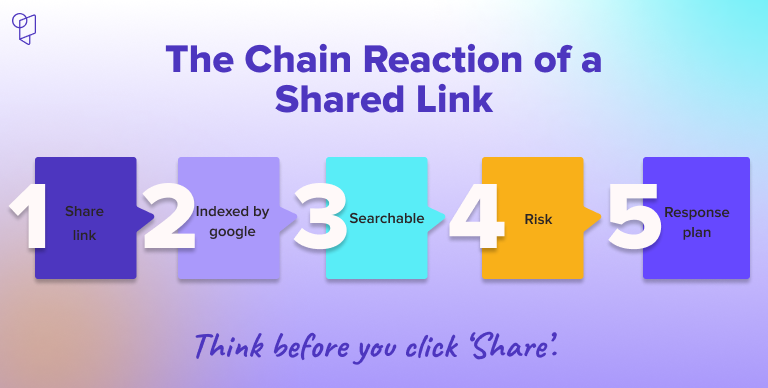

What actually happened (quick recap)

- Users who clicked “Share link” made chats public.

- Some of those links, shared with the opt-in “make this chat discoverable” box ticked, were indexed by Google and appeared in search results.

- Private (unshared) conversations were not exposed; shared ones were.

👉 The real lesson? One small button can open a very big privacy door.

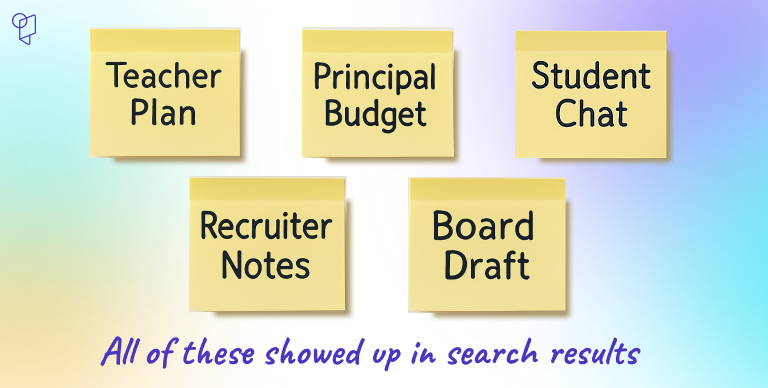

Who’s at risk? Real-world snippets

- Teacher: Shared a discipline plan with nicknames for “problem students.” Indexed. Parents found it.

- Principal: Drafted budget cut strategies in ChatGPT. Indexed. Local paper picked it up.

- Recruiter: Used ChatGPT to summarize confidential candidate feedback. Indexed. Candidate saw it.

- Board member: Brainstormed “layoffs vs. raises.” Indexed. Staff gossip exploded.

- Library: A student asked ChatGPT about depression. Indexed. Stranger found it.

What you can do right now

For schools, teachers, and coaches

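- Assume all shared links are public.

- Search Google for your school’s footprint:

site:chat.openai.com "Your School Name"

site:chatgpt.com/share "Your School Name"

site:chat.openai.com "Unique Phrase"

- Delete old shared links.

- After deleting a link, use Google’s Remove Outdated Content tool to request its removal from search results.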
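If you repeat these audits every term, generating the queries saves retyping. The Python sketch below builds exact-phrase site: queries and matching Google search URLs for a person to open in a browser; it does not fetch or scrape any results. The function names and sample terms are hypothetical, and the chatgpt.com/share pattern is an assumption about where newer share links live, alongside the chat.openai.com domain named above.

```python
from urllib.parse import urlencode

# Domains to audit. chat.openai.com comes from the article; chatgpt.com/share is an
# assumption about the URL pattern used by newer ChatGPT share links.
SHARE_SITES = ["chat.openai.com", "chatgpt.com/share"]


def audit_queries(terms: list[str], sites: list[str] = SHARE_SITES) -> list[str]:
    """Return one exact-phrase site: query for every (site, term) pair."""
    return [f'site:{site} "{term}"' for site in sites for term in terms]


def google_search_url(query: str) -> str:
    """Encode a query as a Google search URL to open by hand in a browser."""
    return "https://www.google.com/search?" + urlencode({"q": query})


if __name__ == "__main__":
    # Hypothetical search terms: swap in your own school name and known prompt phrases.
    for q in audit_queries(["Riverside High School", "Grade 9 discipline plan"]):
        print(q)
        print("   " + google_search_url(q))
```

Keeping the terms in one list means the same audit can be rerun after each deletion to confirm the links have dropped out of results.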

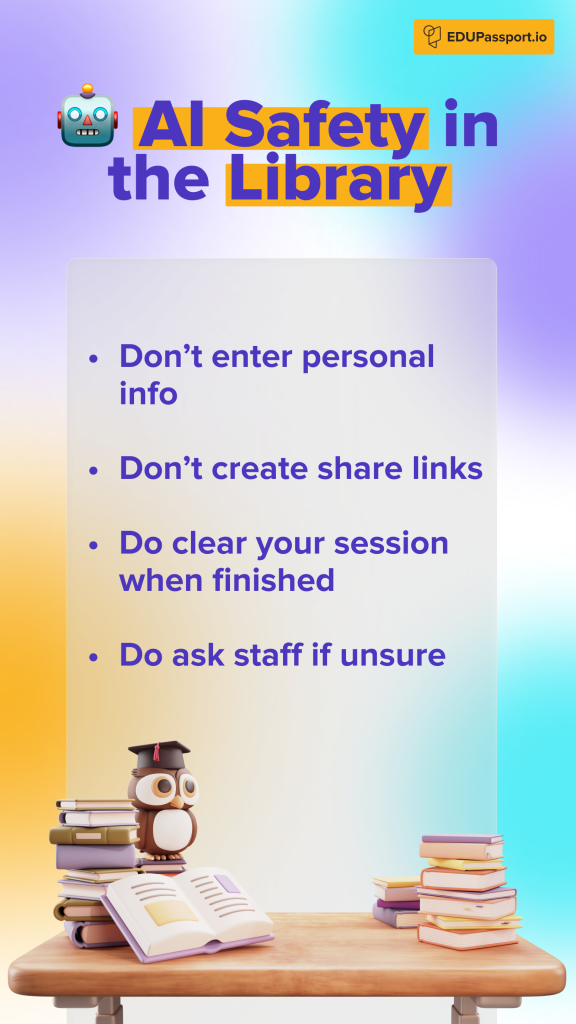

For libraries with many student users

- Post signage at PCs: “Treat every AI prompt as public.”

- Run 15-minute AI safety workshops.

- Automatically clear browser cache and cookies after each session.

- Regularly spot-check Google for open share links that mention your library.

For recruiters, owners, and edtech businesses

- Ban sensitive data in AI prompts.

- Add AI-use rules to staff handbooks.

- Train HR and IT teams in a four-step response: Locate → Delete → De-index → Notify.

The taboo question: privacy vs. punishment

If a student’s AI-written essay leaks on Google, should schools punish… or protect?

- Punish: “You cheated with AI.”

- Protect: “You’re a child whose private chat leaked.”

Either way, the question raises issues of ethics, safety, and equity for schools worldwide.

EDU Passport believes: schools must lead with safety, not surveillance.

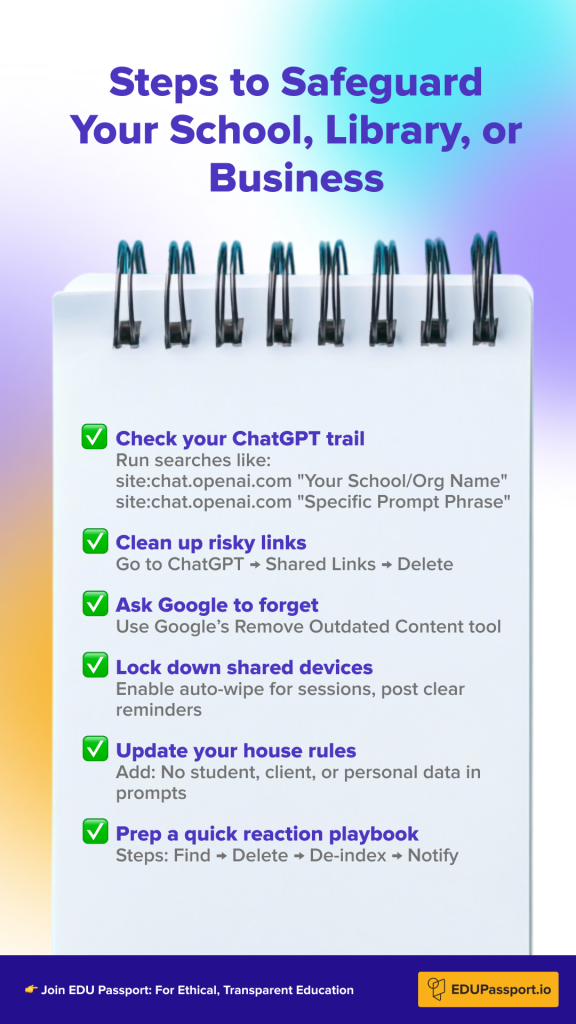

✅ Steps to Protect Your School, Library, or Business

- Audit your ChatGPT footprint. Search with:

site:chat.openai.com "Your School/Org Name"

site:chatgpt.com/share "Your School/Org Name"

site:chat.openai.com "Phrase from a prompt"

- Delete risky links: ChatGPT → “Shared Links” → Delete.

- Request Google de-indexing: use Google’s Remove Outdated Content tool.

- Secure shared devices: auto-wipe sessions in libraries and post reminders.

- Update internal policies: add a clause stating that no student, client, or personal data goes into AI prompts.

- Create a rapid-response plan: Locate → Delete → De-index → Notify.
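The flow is easiest to enforce when every leaked link gets its own log and no step is skipped. Below is a minimal Python sketch of such a log. The class name, field names, and the rule that steps must run strictly in order are our assumptions about how a team might run the plan, not features of ChatGPT or any Google tool.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

# The four response steps, in the order the plan requires.
STEPS = ("locate", "delete", "de-index", "notify")


@dataclass
class LeakIncident:
    """Tracks one leaked share link through Locate -> Delete -> De-index -> Notify."""
    share_url: str
    log: list = field(default_factory=list)  # entries: (step, UTC timestamp, note)

    @property
    def next_step(self) -> Optional[str]:
        """The step that must happen next, or None once all four are done."""
        done = len(self.log)
        return STEPS[done] if done < len(STEPS) else None

    @property
    def closed(self) -> bool:
        return self.next_step is None

    def complete(self, step: str, note: str = "") -> None:
        """Record a finished step; refuse to skip ahead, repeat, or go back."""
        expected = self.next_step
        if step != expected:
            raise ValueError(f"expected step {expected!r}, got {step!r}")
        stamp = datetime.now(timezone.utc).isoformat(timespec="seconds")
        self.log.append((step, stamp, note))


if __name__ == "__main__":
    # Hypothetical share URL for illustration only.
    incident = LeakIncident("https://chatgpt.com/share/EXAMPLE")
    incident.complete("locate", "Found with a site: search on the school name")
    incident.complete("delete", "Removed from the ChatGPT shared links list")
    print(incident.next_step)
```

Raising an error on an out-of-order step is a deliberate choice: notifying families before the link is deleted and de-indexed would point them to content that is still live.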

Practical inserts for your school

HR Clause (for staff handbook)

“Staff must not input student data, client records, or internal HR documents into AI systems. All AI use should be reviewed with the same care as public posting.”

Student Handbook Addition

“AI tools like ChatGPT are powerful but not private. Treat every chat as public. Never enter your name, health details, or personal issues into an AI prompt.”

Library Rules Printout

EDU Passport’s role

We don’t spin “takes.” We inform, equip, and connect. EDU Passport brings the education world to you: schools, libraries, recruiters, edtech, and educators worldwide.

Our mission: make you proactive, not reactive.

👉 Join our global community. Stay informed, stay safe, and stay ahead.